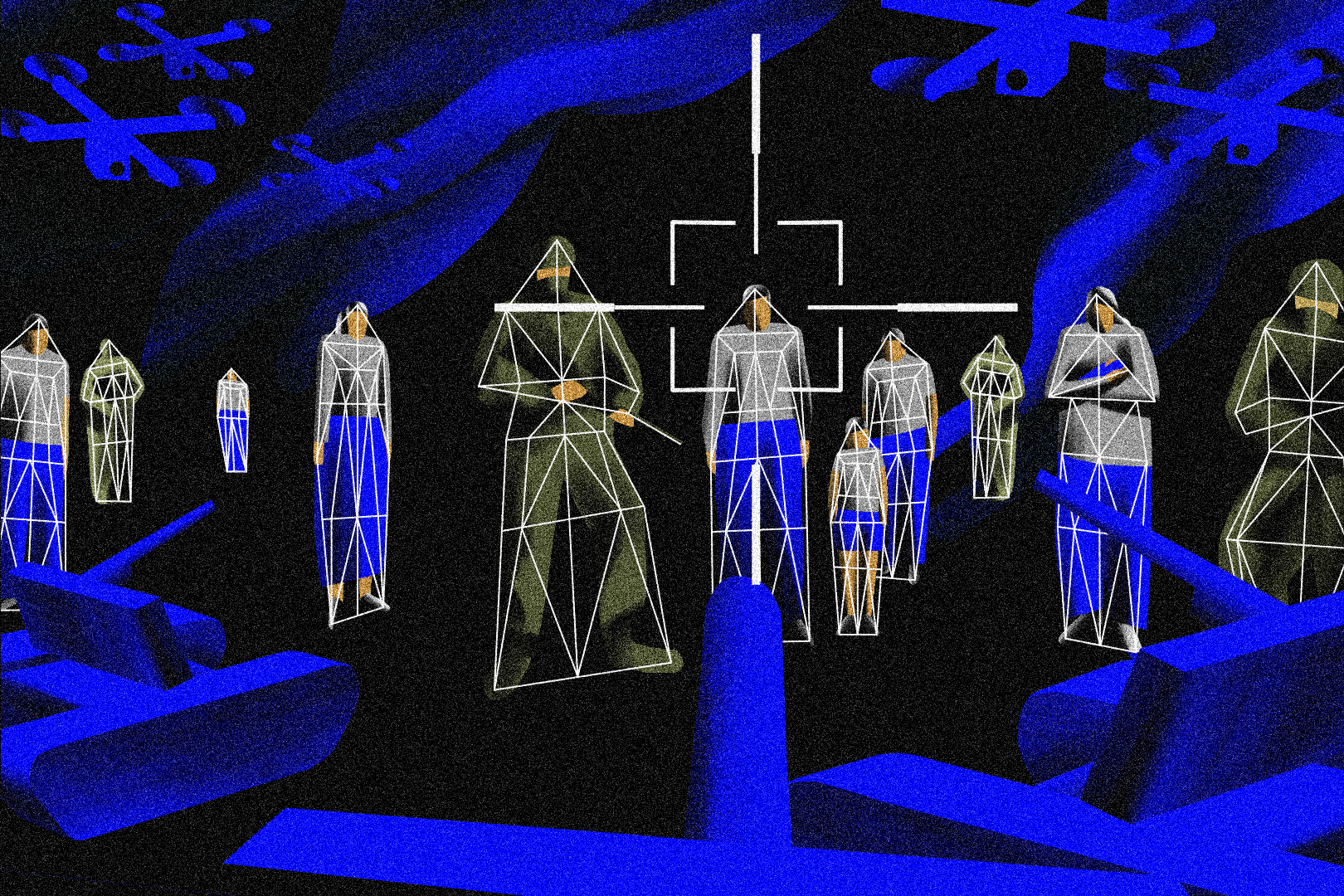

It is often said that autonomous weapons could help minimize the needless horrors of war. Their vision algorithms could be better than humans at distinguishing a schoolhouse from a weapons depot. They won’t be swayed by the furies that lead mortal souls to commit atrocities; they won’t massacre or pillage. Some ethicists have long argued that robots could even be hardwired to follow the laws of war with mathematical consistency.

And yet for machines to translate these virtues into the effective protection of civilians in war zones, they must also possess a key ability: They need to be able to say no.

Read the full article in the Bulletin of the Atomic Scientists.